Quantum computing offers a fundamentally different approach to solving certain classes of complex problems. Unlike classical computers, which use bits that are each either 0 or 1, quantum computers use qubits, which can exist in a combination of both states at once thanks to a property called superposition.

Background

Quantum computing applies the principles of quantum mechanics to computation, with the aim of performing certain calculations that are believed to be impractical on classical computers. A classical bit is strictly 0 or 1, whereas a quantum bit, or qubit, can be in a superposition of both. A register of n qubits is described by 2^n complex amplitudes, and quantum algorithms exploit interference among those amplitudes, although a measurement still returns only a single classical outcome. The field traces its roots to foundational discoveries in quantum mechanics during the early 20th century, including phenomena such as superposition, entanglement, and quantum tunneling.
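The idea of superposition can be made concrete with a small numerical sketch. The Python snippet below is an illustration only, not how real quantum hardware is programmed: it represents a single qubit as a pair of amplitudes and applies a Hadamard gate, a standard single-qubit operation. The function names `apply_hadamard` and `measurement_probabilities` are hypothetical helpers introduced here.

```python
import math

def apply_hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    amp_0, amp_1 = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp_0 + amp_1), scale * (amp_0 - amp_1))

def measurement_probabilities(state):
    """Return the probabilities of measuring 0 and 1 (squared magnitudes)."""
    amp_0, amp_1 = state
    return (abs(amp_0) ** 2, abs(amp_1) ** 2)

# Start in the definite state |0>, then create an equal superposition.
zero = (1.0, 0.0)
plus = apply_hadamard(zero)
probs = measurement_probabilities(plus)
print(probs)  # both probabilities are approximately 0.5
```

Applying the Hadamard gate a second time returns the qubit to the state it started in, which is a simple case of the interference that quantum algorithms rely on.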

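The development of quantum computing has been a multidisciplinary effort, encompassing fields such as physics, computer science, mathematics, and engineering. Key quantum algorithms, such as Shor’s algorithm for factoring large integers and Grover’s algorithm for unstructured search, highlight the distinctive capabilities of quantum systems. The size of the advantage depends on the problem: Shor’s algorithm runs in polynomial time, a superpolynomial improvement over the best known classical factoring methods, whereas Grover’s algorithm provides a quadratic speedup, finding a marked item among N candidates in roughly the square root of N steps. Results like these have motivated work on applications in cryptography, optimization, and simulation.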
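To give a feel for the scale of Grover’s speedup, the following Python sketch simulates the algorithm’s amplitudes classically for a small search space. It is a toy simulation under stated assumptions, not a program for a quantum device: the function name `grover_search` and the choice of marked index are hypothetical, and the oracle is modeled simply as a sign flip on one amplitude.

```python
import math

def grover_search(n_qubits, marked):
    """Simulate Grover's algorithm on 2**n_qubits items with one marked index.

    Returns the number of iterations used and the final probability of
    measuring the marked item.
    """
    n_items = 2 ** n_qubits
    # Uniform superposition over all items.
    amps = [1 / math.sqrt(n_items)] * n_items
    # About (pi / 4) * sqrt(N) iterations are close to optimal.
    iterations = int(math.floor(math.pi / 4 * math.sqrt(n_items)))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    return iterations, amps[marked] ** 2

iterations, p_success = grover_search(n_qubits=8, marked=42)
print(iterations, p_success)
```

With 256 items, the simulation uses 12 Grover iterations, while a classical search of an unsorted list of the same size needs about 128 lookups on average. The gap grows as the square root of the list size, which is a quadratic rather than exponential advantage.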

Geography

Research and development centers dedicated to quantum technology are distributed globally, with major hubs in the United States, China, the European Union, and Canada. Quantum hardware is typically housed in tightly controlled environments, often with cryogenic cooling and electromagnetic shielding, because qubits are fragile and lose coherence when disturbed. Countries like China have invested heavily in quantum communications infrastructure, aiming for communication whose security rests on physical principles rather than computational difficulty, whereas much of the effort in the US and Europe has focused on developing scalable quantum processors.

Society & Culture

The cultural perception of quantum computing is deeply intertwined with notions of futuristic innovation and scientific mastery. It influences popular media, education, and advocacy for scientific literacy. As quantum technology moves closer to practical deployment, societal discussions focus on ethics, privacy, and the potential disruption of existing industries. The fascination with quantum mechanics also permeates philosophical debates about determinism, reality, and the limits of human understanding.

Economy & Trade

The economic implications could be substantial, with quantum computing expected to affect sectors such as finance, pharmaceuticals, logistics, and cybersecurity. Companies investing in quantum hardware and algorithms are seen as gaining a strategic advantage, creating a new landscape of trade and competition. National governments are subsidizing research to secure leadership in this emerging field, with an eye to commercial applications and intellectual property rights.

Military & Technology

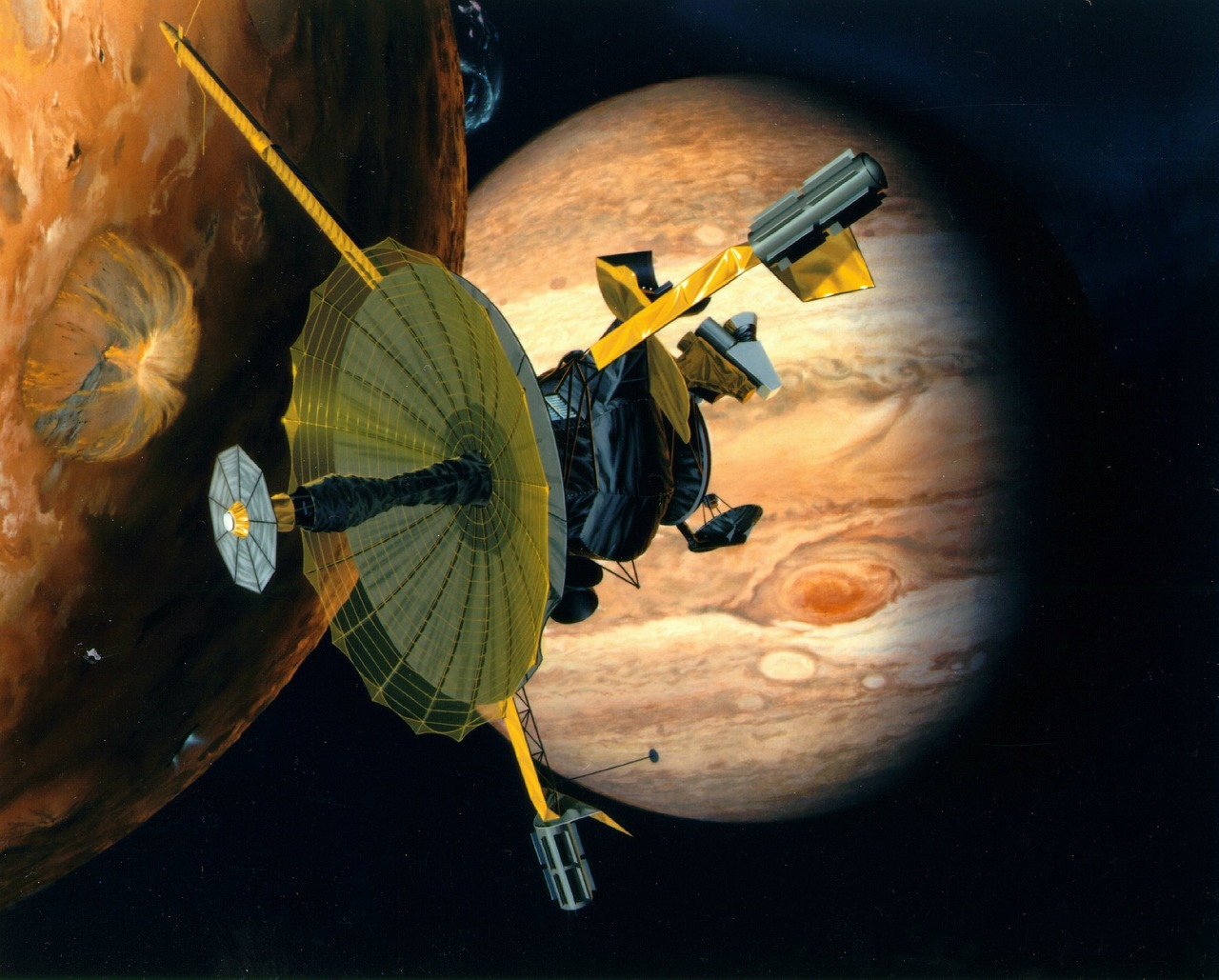

Military applications of quantum technology are highly sensitive and strategic, including secure communications via quantum key distribution and advanced cryptanalysis. Quantum sensors could offer greater precision in navigation and detection than existing instruments, with significant consequences for defense capabilities. As nations race to develop quantum-resistant encryption, meaning classical schemes designed to withstand attacks by quantum computers, the geopolitical landscape may shift, emphasizing the importance of technological sovereignty and security.
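The principle behind quantum key distribution can be sketched with a classical simulation of BB84, a widely cited QKD protocol. The Python code below is a simplified model under stated assumptions, not a secure implementation: the function name `bb84_sift`, the basis labels `+` and `x`, and the intercept-and-resend eavesdropper are illustrative choices, and a pseudorandom generator stands in for quantum measurement outcomes.

```python
import random

def bb84_sift(n_bits, eavesdrop=False, seed=0):
    """Simulate BB84 and return (sifted key length, error rate in sifted key)."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n_bits)]
    alice_bases = [rng.choice("+x") for _ in range(n_bits)]
    bob_bases = [rng.choice("+x") for _ in range(n_bits)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        sent_bit, sent_basis = bit, a_basis
        if eavesdrop:
            # Eve measures in a random basis and resends what she saw.
            eve_basis = rng.choice("+x")
            if eve_basis != sent_basis:
                sent_bit = rng.randint(0, 1)  # wrong basis: random outcome
            sent_basis = eve_basis
        if b_basis == sent_basis:
            bob_bits.append(sent_bit)
        else:
            bob_bits.append(rng.randint(0, 1))  # wrong basis: random outcome

    # Sifting: keep only positions where Alice and Bob chose the same basis.
    kept = [
        (a, b)
        for a, b, ab, bb in zip(alice_bits, bob_bits, alice_bases, bob_bases)
        if ab == bb
    ]
    errors = sum(a != b for a, b in kept)
    error_rate = errors / len(kept) if kept else 0.0
    return len(kept), error_rate

sifted_clean, qber_clean = bb84_sift(4000)
sifted_eve, qber_eve = bb84_sift(4000, eavesdrop=True, seed=1)
print(qber_clean, qber_eve)
```

In this model an undisturbed channel yields a sifted key with no errors, while the intercept-and-resend attack introduces errors in roughly a quarter of the sifted bits. Comparing a sample of the key therefore reveals the eavesdropper, which is the property that makes the approach attractive for secure communications.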

Governance & Law

Legal frameworks are struggling to keep pace with the rapid evolution of quantum technology. Regulatory bodies are exploring standards for quantum security, data protection, and intellectual property rights. International collaborations and treaties may emerge to govern the ethical development and deployment of quantum systems, emphasizing transparency, safety, and equitable access.

Archaeology & Sources

Historical sources on the theoretical foundations of quantum mechanics date back to the early 20th century, featuring seminal papers by Einstein, Schrödinger, Heisenberg, and Dirac. Modern quantum computing research draws upon a vast array of scientific literature, patents, and institutional reports. Archival data from government agencies, research labs, and industry consortia provide a comprehensive view of technological evolution over the past decades.

Timeline

- 1981 - Richard Feynman proposes the concept of a quantum computer.

- 1994 - Peter Shor publishes a quantum algorithm for factoring large integers in polynomial time.

- 2001 - IBM and Stanford researchers run Shor’s algorithm on a 7-qubit nuclear magnetic resonance quantum computer, factoring 15.

- 2011 - D-Wave announces the D-Wave One, a 128-qubit quantum annealer that it markets as the first commercial quantum computer.

- 2019 - Google claims quantum supremacy with its 53-qubit Sycamore processor, reporting that it completed a specific sampling task far faster than the estimated time on a classical supercomputer. IBM disputes the estimate.

- 2023 - Reported advances in error correction and qubit stability lower, but do not remove, the barriers to commercial quantum computing.

Debates & Controversies

Despite rapid progress, debates persist regarding the true readiness and scalability of quantum computers. Critics question whether current demonstrations genuinely achieve quantum supremacy or whether they are tailored experiments with limited practical relevance. Security concerns center on the possibility that a sufficiently large, error-corrected quantum computer running Shor’s algorithm could break widely used public-key encryption such as RSA, threatening data security worldwide. Discussions also remain active about when quantum advantage will be reached for practically useful problems and about the resources required to build large-scale quantum systems.

Conclusion

As we stand on the cusp of this quantum revolution, quantum computing could reshape many areas of modern life, from healthcare and finance to national security and philosophical inquiry. While challenges persist, the pursuit of understanding and harnessing quantum phenomena continues to drive innovation. The journey from theoretical framework to practical reality is underway, and the coming years will show how far the mysteries of the quantum world can be translated into practical breakthroughs.